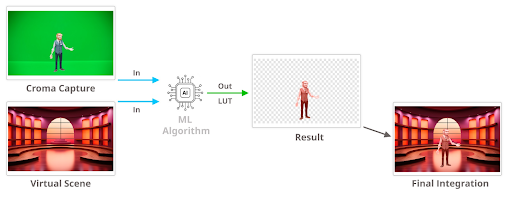

Brainstorm has partnered with Trinity College Dublin (TCD) to develop a machine learning (ML) algorithm that automatically color-balances virtual video captures in real time. The aim is for presenter silhouettes to integrate seamlessly into rendered virtual scenes, improving the realism and visual coherence of virtual productions.

As part of this initiative, Brainstorm is building a virtual laboratory for video capture and insertion. The laboratory can generate synthetic video captures of presenters under configurable lighting conditions, render virtual scenes, and composite the two into a final image in which both elements blend seamlessly.

The end goal of the collaboration is to integrate this ML-powered module into InfinitySet, Brainstorm's leading virtual production tool, and to demonstrate the technology in real virtual production environments, showcasing its effectiveness and versatility.

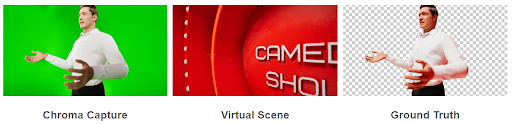

Work to date has focused on implementing a preliminary virtual laboratory that generates image sets, either from manually chosen settings or with randomized adjustments. Initial tests have established a baseline and helped identify which parameters the image generation process should include to train the algorithm.